Mastering LLM Architectures: A Simple Guide to MCP, Agentic AI, and RAG for Enterprise AI Adoption

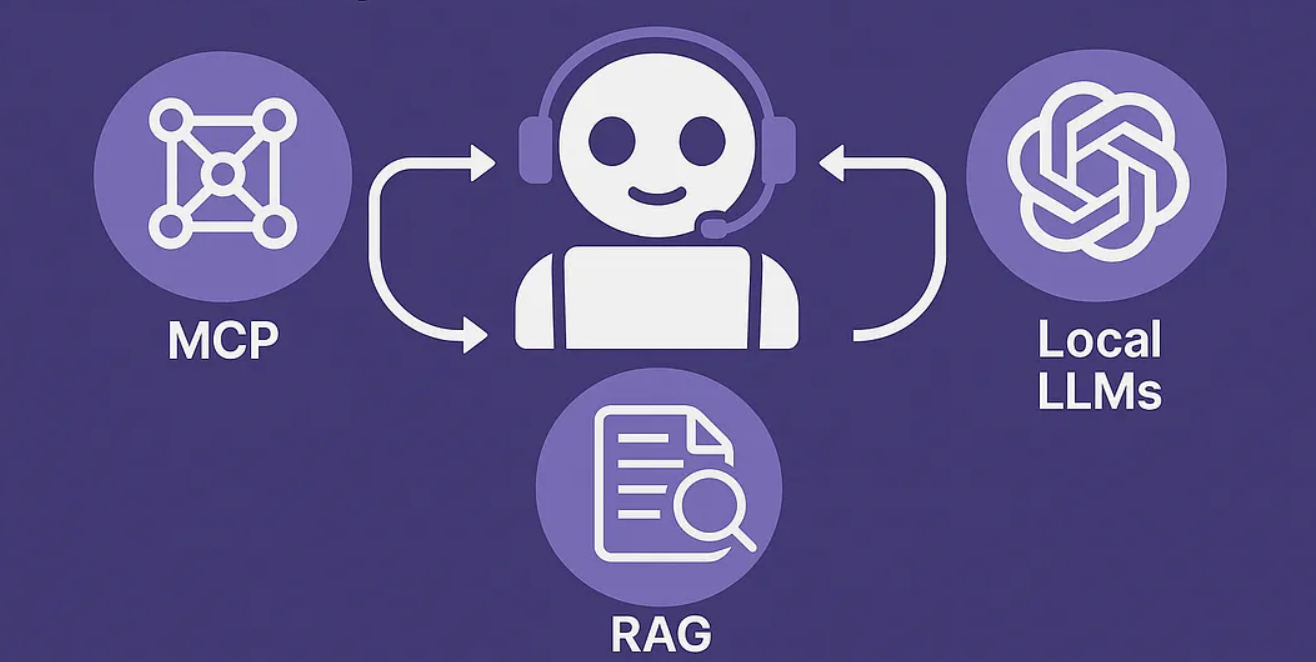

Explore three LLM architectures: Model Context Protocol (MCP), Agentic AI, and Retrieval Augmented Generation (RAG). Understand how each one works, its challenges, and its ideal use cases for enterprise AI adoption, and see why hybrid approaches may shape what comes next.

In today’s rapidly evolving AI landscape, Large Language Models (LLMs) are at the forefront of innovation. Businesses looking to integrate AI need to understand how these models connect to data and carry out complex tasks. This guide breaks down three leading approaches to building with LLMs: Model Context Protocol (MCP), Agentic AI, and Retrieval Augmented Generation (RAG). We’ll explore how each works, its benefits, and its challenges, so you can decide which best fits your enterprise AI journey.

This guide compares three LLM architectures (MCP, Agentic AI, and RAG) and outlines their distinct mechanisms and applications. MCP gives the model direct, real-time access to data sources and tools without requiring vector storage, which makes it well suited to low-latency scenarios. RAG instead retrieves context from a vector database built over largely static information, so it struggles to reflect real-time updates and adds storage overhead. Agentic AI coordinates multiple agents to handle complex tasks, at the cost of high latency and heavy resource use. Because each approach carries its own trade-offs, hybrid models that combine their strengths may come to dominate enterprise AI adoption.
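The contrast between RAG and MCP can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: word-count vectors stand in for a real embedding model, and `ToyVectorStore`, `build_prompt`, and the `get_order_status` tool are hypothetical names. Only the JSON-RPC 2.0 `tools/call` request shape follows the MCP specification; the transport and the `initialize` handshake are omitted.

```python
import json
import math
import re
from collections import Counter


# --- RAG-style retrieval over a toy in-memory vector store ---

def embed(text: str) -> Counter:
    """Toy embedding (assumption): lowercase word counts instead of a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self, docs: list[str]):
        # Documents are embedded once, at index time. Later edits to the
        # source data are invisible until the store is rebuilt.
        self.index = [(doc, embed(doc)) for doc in docs]

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        # Rank every indexed document by similarity to the query; keep the top k.
        q = embed(query)
        ranked = sorted(self.index, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Augment the user's question with retrieved context before sending it to an LLM."""
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"


# --- MCP-style access: request live data from a server via JSON-RPC 2.0 ---

def mcp_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request. Transport and handshake are omitted."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


if __name__ == "__main__":
    store = ToyVectorStore([
        "Refund policy: customers may return items within 30 days.",
        "Shipping: orders ship within 2 business days.",
    ])
    question = "How many days do I have to return items?"
    print(build_prompt(question, store.retrieve(question, k=1)))
    # Hypothetical tool name and arguments; a real server advertises its tools via `tools/list`.
    print(mcp_tool_call(1, "get_order_status", {"order_id": "A123"}))
```

The store embeds its documents once, at construction time, so an updated refund policy would not be retrieved until the index is rebuilt. That is the real-time update problem RAG faces, whereas the MCP request asks a server for the current value at call time.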