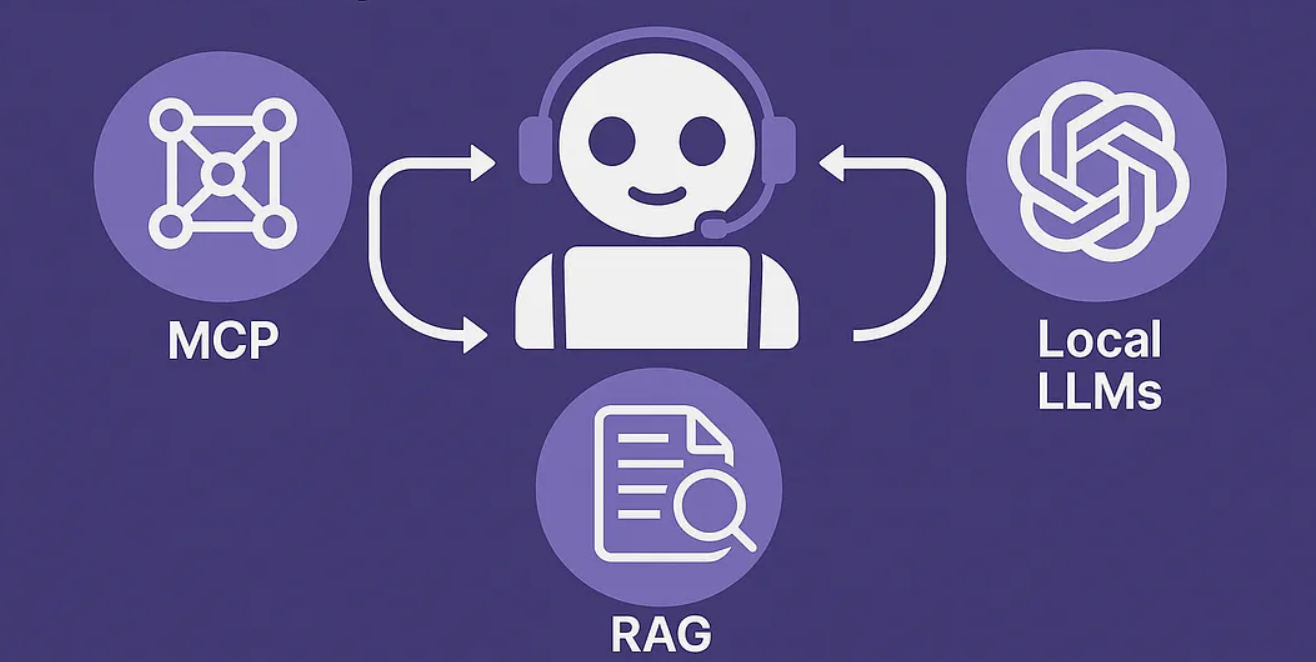

Mastering LLM Architectures: A Simple Guide to MCP, Agentic AI, and RAG for Enterprise AI Adoption

3. Retrieval Augmented Generation (RAG): The Knowledge Base Enhancer

Often, an LLM needs to access a vast amount of existing information to provide accurate and detailed responses. This is where Retrieval Augmented Generation (RAG) becomes incredibly valuable.

What is RAG?

RAG enhances LLMs by first retrieving relevant information from a dedicated knowledge base before generating a response. Think of it as an LLM that can quickly look up facts in a comprehensive library before answering your question.

How it Works (The Flow):

- User Query: You ask a question (e.g., “What is the history of the internet?”).

- Retrieval Query: Your query is transformed to search the knowledge base.

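- Embedding: Your query and the documents in the knowledge base are converted into numerical vectors called “embeddings”. The documents are typically embedded ahead of time and the query at request time. Texts with similar meanings end up with similar vectors, which is what lets the system match on meaning rather than exact wording.

- Vector DB: The document embeddings are stored in a specialized database called a “vector database”, which is built for fast similarity search.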

- Retriever: The retriever then searches this vector database to find “chunks” (small pieces of text) that are most similar in meaning to your query.

- Generator: The LLM (acting as the “generator”) receives both your original query and these retrieved chunks of information.

- LLM & Answer: The LLM then uses this combined information to generate a comprehensive and contextually rich answer.

- App & User: The answer is delivered to you through an application.
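The flow above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not a production design: the `embed` function is a toy bag-of-words counter standing in for a real embedding model, the in-memory `vector_store` list stands in for a vector database, and the final LLM call is left out, so the sketch stops once the prompt is assembled. All function names and sample chunks are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Indexing step (done ahead of time): embed every chunk and store the result.
chunks = [
    "ARPANET, launched in 1969, was an early packet-switching network.",
    "The World Wide Web was invented by Tim Berners-Lee in 1989.",
    "Sourdough bread needs a starter made from flour and water.",
]
vector_store = [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(query, k=2):
    """Retriever: return the k stored chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        vector_store,
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query, retrieved):
    """Generator input: the original query plus the retrieved chunks."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


query = "Who invented the World Wide Web?"
top_chunks = retrieve(query)
prompt = build_prompt(query, top_chunks)
print(prompt)  # This prompt is what would be sent to the LLM.
```

Swapping the toy `embed` for a real embedding model and the list for a vector database changes the quality and scale of retrieval, but the shape of the flow stays the same.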

Key Challenges & Why They Matter:

Despite its power, RAG has limitations:

- Static Embeddings: The embeddings are usually precomputed, meaning they are created beforehand and don’t automatically update when the underlying data changes.

- Context Staleness: Because embeddings are static, the information they represent can become outdated, especially in fast-changing areas like news or social media.

- Storage Overhead: Every chunk gets its own embedding, so storage grows with both the number of chunks and the size of each vector. For very large datasets, the vector database can require significant storage space.

- Semantic Gaps: Embeddings might not always capture the full, nuanced meaning of text. This can lead to the retriever fetching incomplete or irrelevant information. For example, a query about “Apple” might mistakenly retrieve information about the fruit instead of the technology company, depending on the embedding quality.

- Limited Real-Time Support: Because it relies on precomputed data, RAG handles truly real-time data updates less well than MCP.
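One common way to soften the static-embedding and staleness problems is to record a content hash for each document at indexing time, then periodically compare it against the current text and re-embed only what changed. The sketch below illustrates that idea using Python's standard `hashlib`. The document IDs, sample texts, and helper names are hypothetical, and the actual re-embedding call is omitted.

```python
import hashlib


def fingerprint(text):
    """Stable content hash used to detect whether a document has changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale(documents, indexed_hashes):
    """Return IDs of documents that are new or changed since they were indexed."""
    return [
        doc_id
        for doc_id, text in documents.items()
        if indexed_hashes.get(doc_id) != fingerprint(text)
    ]


def find_deleted(documents, indexed_hashes):
    """Return IDs that are still in the index but no longer in the source data."""
    return [doc_id for doc_id in indexed_hashes if doc_id not in documents]


# State at indexing time: every document is embedded and its hash is recorded.
documents = {
    "policy": "Remote work is allowed two days a week.",
    "faq": "Expense reports are due by the fifth of each month.",
}
indexed_hashes = {doc_id: fingerprint(text) for doc_id, text in documents.items()}

# Later, the source data changes: one edit, one addition, one removal.
documents["policy"] = "Remote work is allowed three days a week."
documents["handbook"] = "New hires complete onboarding in their first week."
del documents["faq"]

stale = find_stale(documents, indexed_hashes)      # needs (re-)embedding
deleted = find_deleted(documents, indexed_hashes)  # should be removed from the index
print("Re-embed:", stale)
print("Remove:", deleted)
```

This keeps the index closer to the source data without re-embedding everything, but it is still a batch process: between refresh runs, answers can reflect outdated content.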

Real-World Examples & Use Cases:

RAG excels when you have a large, relatively stable knowledge base.

- Legal Research: Retrieving specific clauses or case precedents from a vast legal library.

- Historical Data Analysis: Answering questions based on archives or historical documents.

- Internal Company Knowledge Bases: Providing employees with answers drawn from policy documents, manuals, or past project reports.

Highlighting Point: RAG remains a practical and powerful option for knowledge retrieval in stable domains where data doesn’t change frequently.