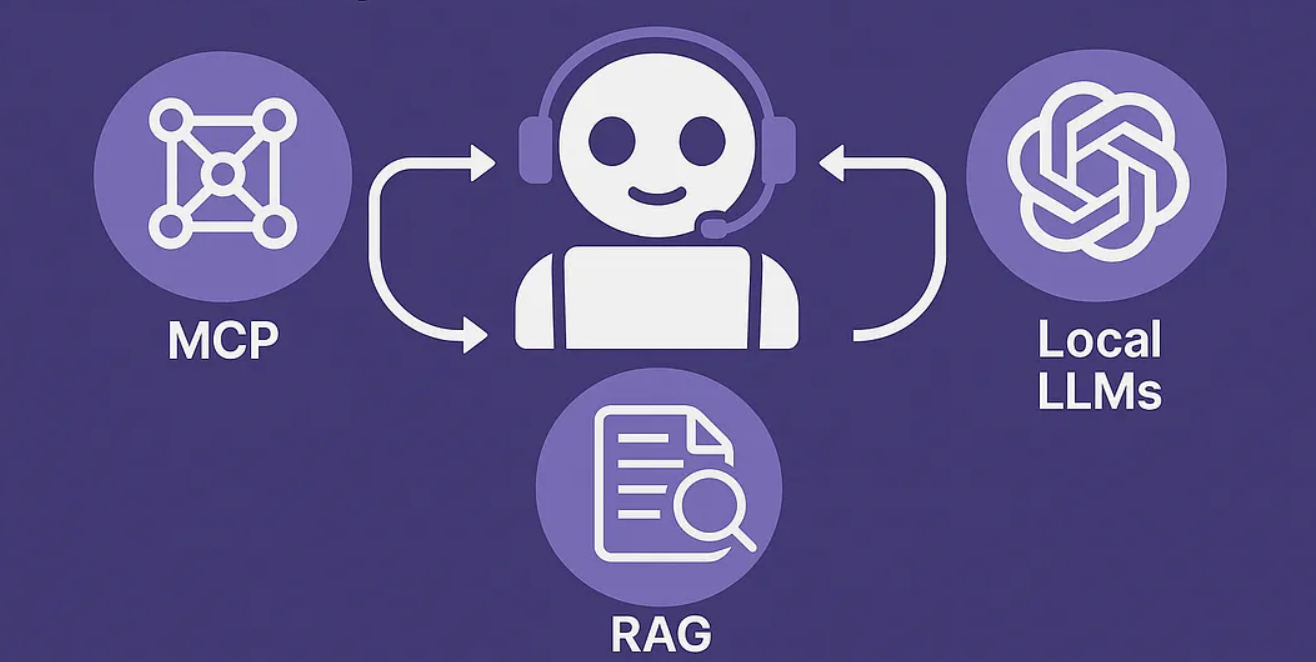

Mastering LLM Architectures: A Simple Guide to MCP, Agentic AI, and RAG for Enterprise AI Adoption

Comparing the Architectures: Choosing the Right Tool

Each of these LLM architectures has distinct strengths and weaknesses, so each suits different scenarios.

- Model Context Protocol (MCP):
  - Best For: Real-time, low-latency applications with dynamic (constantly changing) data needs, since the model queries live tools and data sources at request time.
  - Trade-off: May not offer the semantic retrieval over large document collections that vector-based systems like RAG provide.

- Agentic AI:
  - Best For: Complex, multi-step tasks that require several agents or tools to work together.
  - Trade-off: Every step adds model calls, so it suffers from high latency and significant resource demands, and its multi-step behavior is difficult to debug. This makes it less practical for smaller-scale or simpler applications.

- Retrieval Augmented Generation (RAG):
  - Best For: Drawing on large, relatively static knowledge bases to provide detailed, context-rich answers.
  - Trade-off: Struggles with real-time updates, because new or changed documents must be re-embedded and re-indexed before they can be retrieved. It can also return semantically inaccurate context, because embedding similarity does not always match true relevance.
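MCP's fit for dynamic data comes from its request model: the client asks a server to run a tool at the moment an answer is needed, rather than searching a pre-built index. MCP messages are JSON-RPC 2.0, and `tools/call` is the method for invoking a server-side tool. The minimal sketch below only builds such a request. The tool name `get_inventory` and its `sku` argument are hypothetical, and a real client would first complete MCP's `initialize` handshake and send the message over a transport such as stdio or HTTP.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and argument, for illustration only.
request = make_tool_call(1, "get_inventory", {"sku": "A-100"})
print(request)
```

Because the server executes the tool when the request arrives, the result reflects the data source's current state. The flip side is that the quality of the answer depends on what the tool returns, not on any semantic ranking.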
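The latency and debugging costs of agentic systems come from their control loop. A planner, usually an LLM, is consulted once per step, and each step may invoke a tool before the next round begins. Below is a toy loop, not any framework's API: `fake_planner` is a hypothetical scripted stand-in for a model call, the tools are stubs, and the 800 ms per call is an illustrative assumption rather than a measurement.

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    """Records every step so a multi-step run can be inspected afterwards."""
    steps: list = field(default_factory=list)
    simulated_ms: int = 0


def fake_planner(goal: str, history: list) -> str:
    """Hypothetical stand-in for an LLM call that picks the next action."""
    plan = ["search", "summarize", "finish"]
    return plan[min(len(history), len(plan) - 1)]


# Stub tools; a real agent would call search APIs, databases, other agents, etc.
TOOLS = {
    "search": lambda goal: f"3 documents about {goal}",
    "summarize": lambda goal: f"summary of findings on {goal}",
}


def run_agent(goal: str, ms_per_llm_call: int = 800, max_steps: int = 10) -> Trace:
    trace = Trace()
    history = []
    for _ in range(max_steps):
        action = fake_planner(goal, history)
        trace.simulated_ms += ms_per_llm_call  # each planning step is one model round trip
        if action == "finish":
            trace.steps.append(("finish", None))
            break
        observation = TOOLS[action](goal)
        history.append((action, observation))
        trace.steps.append((action, observation))
    return trace


trace = run_agent("Q3 churn")
for step in trace.steps:
    print(step)
print("simulated latency (ms):", trace.simulated_ms)
```

Even this three-step run pays for three planner calls, and the cost grows with every extra step. The recorded trace is what you would inspect when a run goes wrong, which hints at why debugging longer, branching runs is hard.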
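RAG's difficulty with real-time updates follows from when embedding happens: documents are embedded into an index ahead of time, and queries search that snapshot. The sketch below assumes a toy bag-of-words vector and cosine similarity in place of a learned embedding model and vector database, and all function names are hypothetical. Word-overlap scoring is also a crude reminder that vector similarity is not the same thing as relevance.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: list[str]) -> list[tuple[str, Counter]]:
    """Embed every document once, up front; later changes are invisible until this runs again."""
    return [(doc, embed(doc)) for doc in docs]


def retrieve(index, query: str, k: int = 1) -> list[str]:
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(index, question: str) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(retrieve(index, question, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = ["refund policy allows returns within 30 days",
        "shipping takes 5 business days"]
index = build_index(docs)

docs.append("refund policy now 14 days")  # new fact arrives after indexing
print(retrieve(index, "what is the refund policy"))  # ['refund policy allows returns within 30 days']

index = build_index(docs)  # re-embed and re-index
print(retrieve(index, "what is the refund policy"))  # ['refund policy now 14 days']
```

Until the index is rebuilt, the model is grounded on the stale 30-day policy. In production, re-embedding a large corpus on every change is what makes RAG a poor fit for constantly changing data.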