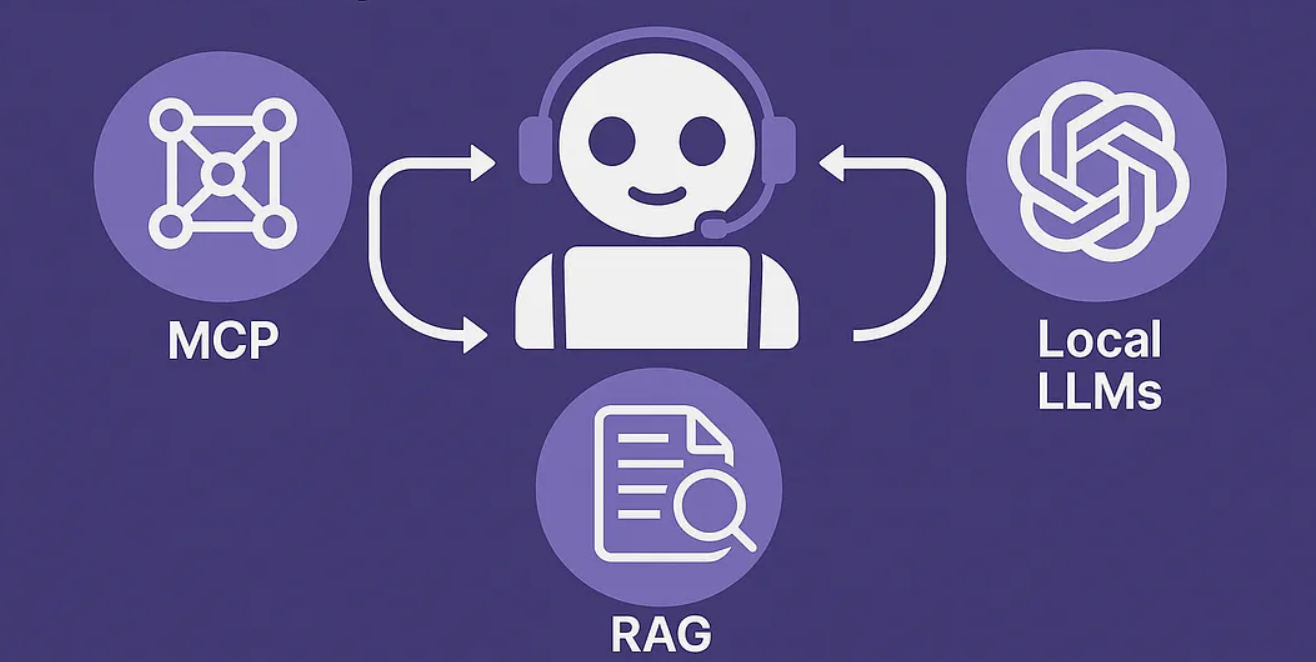

Mastering LLM Architectures: A Simple Guide to MCP, Agentic AI, and RAG for Enterprise AI Adoption

The Future is Hybrid: Combining Strengths

As enterprises adopt AI more widely, it's becoming clear that no single "one size fits all" approach will dominate. The future more likely lies in hybrid models that combine the strengths of MCP, Agentic AI, and RAG.

For instance, some systems are already exploring ways to integrate real-time data access (like MCP) with RAG techniques, perhaps by refreshing embeddings as the underlying data changes so the retrieved information stays current. Imagine a system that uses MCP for live updates but falls back on RAG for deep dives into historical, stable data. This "layered retrieval strategy" could pair the freshness of live access with the depth of an indexed knowledge base.
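The layered retrieval strategy described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real MCP or RAG implementation: `layered_retrieve`, `fake_live_lookup`, `fake_archive_search`, and the sample data are all hypothetical stand-ins. In practice the live layer would call an MCP server and the archive layer would query a vector index.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Answer:
    source: str  # "live" or "archive"
    text: str


def layered_retrieve(
    query: str,
    live_lookup: Callable[[str], Optional[str]],
    archive_search: Callable[[str], str],
) -> Answer:
    """Try the live layer first; fall back to the archive layer."""
    live = live_lookup(query)
    if live is not None:
        return Answer("live", live)
    return Answer("archive", archive_search(query))


# Hypothetical stand-in for a live, MCP-style data source.
LIVE_DATA = {"inventory sku-42": "17 units in stock"}

# Hypothetical stand-in for a RAG index over stable, historical documents.
ARCHIVE_DOCS = [
    "2019 annual report: revenue grew 8%",
    "Returns policy: 30 days from delivery",
]


def fake_live_lookup(query: str) -> Optional[str]:
    # Exact-match lookup; returns None when the live layer has no answer.
    return LIVE_DATA.get(query.lower())


def fake_archive_search(query: str) -> str:
    # Naive keyword overlap, used here in place of embedding similarity.
    words = set(query.lower().split())
    return max(ARCHIVE_DOCS, key=lambda d: len(words & set(d.lower().split())))


if __name__ == "__main__":
    for q in ("Inventory SKU-42", "what is the returns policy"):
        print(layered_retrieve(q, fake_live_lookup, fake_archive_search))
```

Passing the two layers in as plain functions keeps the routing logic independent of whichever live connector or vector store an organization actually uses, which is the orchestration point the hybrid approach depends on.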

Understanding these nuances is vital for effective AI adoption within any enterprise. The key to long-term success may depend less on picking a single architecture and more on your organization’s ability to orchestrate and combine these powerful approaches effectively.

Final Thoughts

LLM architectures are evolving to let AI work more seamlessly with external data and carry out increasingly complex tasks. By understanding MCP, Agentic AI, and RAG, you're better equipped to design and implement AI solutions that meet your organization's specific needs.
